Homoscedasticity: Difference between revisions

Revision as of 12:19, 20 May 2016

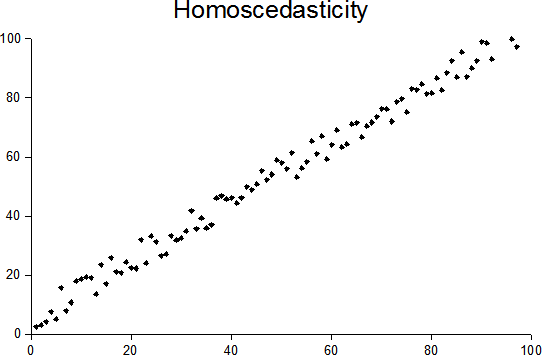

In statistics, a sequence or vector of random variables is homoscedastic if all random variables in it have the same finite variance. This property is also known as homogeneity of variance. The complementary notion is called heteroscedasticity. (Note: the alternative spellings homoskedasticity and heteroskedasticity are equally correct and are also used frequently.) As described by Joshua Isaac Walters, "the assumption of homoscedasticity simplifies mathematical and computational treatment and usually leads to adequate estimation results (e.g. in data mining) even if the assumption is not true."

Serious violations of homoscedasticity (assuming that data are homoscedastic when they are actually heteroscedastic) result in underemphasizing the Pearson coefficient. Assuming homoscedasticity means assuming that the variance is constant throughout the distribution.

Assumptions of a Regression Model

In simple linear regression analysis, one assumption of the fitted model is that the standard deviation of the error terms is constant and does not depend on the x-value. Consequently, the probability distribution of each y (response variable) has the same standard deviation regardless of the x-value (predictor). In short, this assumption is homoscedasticity.
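The constant-spread assumption can be illustrated with a small simulation. The following is a minimal sketch, not a standard procedure: the model coefficients, the noise functions, and the helper names (`simulate`, `ols_fit`, `residual_sd_by_half`) are all assumptions made for illustration. It fits a simple linear regression and compares the residual standard deviation in the lower and upper halves of the x-range.

```python
import random
import statistics


def simulate(n, sigma_fn, seed=0, intercept=1.0, slope=2.0):
    """Draw (x, y) pairs from y = intercept + slope*x + e,
    where e is normal with mean 0 and standard deviation sigma_fn(x).
    The coefficients and the x-range are arbitrary illustrative choices."""
    rng = random.Random(seed)
    xs = [rng.uniform(1.0, 10.0) for _ in range(n)]
    ys = [intercept + slope * x + rng.gauss(0.0, sigma_fn(x)) for x in xs]
    return xs, ys


def ols_fit(xs, ys):
    """Ordinary least squares for one predictor; returns (intercept, slope)."""
    mx = statistics.fmean(xs)
    my = statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def residual_sd_by_half(xs, ys):
    """Residual standard deviation for the low-x half and the high-x half."""
    a, b = ols_fit(xs, ys)
    # Sort the (x, residual) pairs by x, then split them in the middle.
    pairs = sorted((x, y - (a + b * x)) for x, y in zip(xs, ys))
    half = len(pairs) // 2
    low = [r for _, r in pairs[:half]]
    high = [r for _, r in pairs[half:]]
    return statistics.pstdev(low), statistics.pstdev(high)


# Homoscedastic errors: the standard deviation does not depend on x.
homo = residual_sd_by_half(*simulate(2000, lambda x: 1.0))
# Heteroscedastic errors: the standard deviation grows with x.
hetero = residual_sd_by_half(*simulate(2000, lambda x: 0.5 * x))

print("homoscedastic   (low, high):", homo)
print("heteroscedastic (low, high):", hetero)
```

Under the homoscedastic setting the two residual standard deviations should be roughly equal, while under the heteroscedastic setting the high-x half should show a clearly larger spread.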

Testing

Residuals can be tested for homoscedasticity using the Breusch-Pagan test, which regresses the squared residuals on the independent variables. Because the Breusch-Pagan test is sensitive to departures from normality, the Koenker-Bassett (generalized Breusch-Pagan) test statistic is typically used for general purposes. To test for groupwise heteroscedasticity, the Goldfeld-Quandt test is used.
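The auxiliary regression behind the Breusch-Pagan idea can be sketched in a few lines. The example below is an illustrative sketch under stated assumptions, not a reference implementation: it handles a single predictor only, uses the studentized (Koenker) form of the statistic, n times the R² of the regression of squared residuals on x, and compares it with a chi-squared distribution with one degree of freedom. The function names and the simulated data are hypothetical.

```python
import math
import random


def ols_simple(xs, ys):
    """Ordinary least squares for one predictor; returns (intercept, slope)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def r_squared(xs, ys):
    """Coefficient of determination of the simple regression of ys on xs."""
    a, b = ols_simple(xs, ys)
    my = sum(ys) / len(ys)
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


def koenker_bp_test(xs, ys):
    """Studentized Breusch-Pagan statistic for one predictor.

    Step 1: fit y on x and square the residuals.
    Step 2: regress the squared residuals on x.
    Step 3: LM = n * R^2 of that auxiliary regression.
    Returns (LM, p-value) using the chi-squared(1) reference distribution.
    """
    a, b = ols_simple(xs, ys)
    sq_resid = [(y - (a + b * x)) ** 2 for x, y in zip(xs, ys)]
    lm = max(len(xs) * r_squared(xs, sq_resid), 0.0)  # guard against rounding
    # For one degree of freedom, P(chi2 > lm) = erfc(sqrt(lm / 2)).
    p_value = math.erfc(math.sqrt(lm / 2.0))
    return lm, p_value


def make_data(n, sigma_fn, seed=1):
    """Hypothetical test data: y = 1 + 2x + e, with sd of e given by sigma_fn(x)."""
    rng = random.Random(seed)
    xs = [rng.uniform(1.0, 10.0) for _ in range(n)]
    ys = [1.0 + 2.0 * x + rng.gauss(0.0, sigma_fn(x)) for x in xs]
    return xs, ys


print("constant variance:", koenker_bp_test(*make_data(500, lambda x: 1.0)))
print("variance grows with x:", koenker_bp_test(*make_data(500, lambda x: 0.5 * x)))
```

A small p-value suggests that the error variance depends on x. With several predictors the auxiliary regression would include all of them and the degrees of freedom would equal the number of predictors, which this single-predictor sketch does not cover.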